DirectX Paint and WinUI 3.0

The Power of Pixel Shaders in DirectX 11

July 5th, 2023

Pixel shaders are crucial in graphics programming, particularly in DirectX 11. They determine the final color of each pixel on the screen. Think of a sheet of grid paper where each square represents a pixel. We can program a pixel shader to decide how to color each square based on certain rules. These rules can be simple, like making everything red, or complex, involving lighting, texture mapping, and more.

So, how does a pixel shader work? It runs as one stage of the graphics pipeline. For each pixel, it receives values interpolated from the vertex attributes of the primitive being drawn, along with any textures and constant data bound to it, and uses them to calculate that pixel's final color. Each invocation runs independently of the others, like a team of tiny artists, each painting a single square precisely.
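To make the "tiny artists" picture concrete, here is a CPU-side sketch in C++ (the usual host language for Direct3D 11 applications) of what the pixel shader stage does conceptually: call the same function once per pixel, with each call seeing only its own inputs. The names RunPixelShader, PixelFunction, and Color are hypothetical, and a real GPU runs these invocations in parallel rather than in a nested loop.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

struct Color { float r, g, b, a; };

// Hypothetical "pixel function": given a pixel's coordinates and the size
// of the target, return that pixel's color.
using PixelFunction = std::function<Color(int x, int y, int width, int height)>;

// CPU stand-in for the pixel shader stage: call the same function once per
// pixel and store each result. No call reads another pixel's output, which
// is what lets a GPU run these invocations in parallel, in any order.
std::vector<Color> RunPixelShader(int width, int height, const PixelFunction& shade)
{
    assert(width > 0 && height > 0);
    std::vector<Color> target(static_cast<std::size_t>(width) * height);
    for (int y = 0; y < height; ++y)
        for (int x = 0; x < width; ++x)
            target[static_cast<std::size_t>(y) * width + x] = shade(x, y, width, height);
    return target;
}
```

For example, passing a function that returns red when x is less than half the width and blue otherwise fills the left half of the target with red and the right half with blue.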

Pixel shaders offer fine control over the rendering process, allowing for unique art styles or custom visual effects in games and high-performance applications. Even for 2D graphics, pixel shaders enable rich effects such as gradients, patterns, and lighting. In Direct3D 11 you can bind no pixel shader at all, which is useful for depth-only passes such as shadow-map rendering, but a draw that should write color to a render target needs one. That is why the pixel shader is a standard part of the pipeline.

Understanding HLSL: The Language of Shaders

HLSL (High-Level Shader Language) is the shader language used in DirectX, with a syntax similar to C. Its basic data types, like int, float, and bool, are the building blocks of shader programs. Vectors are created by appending a component count to the type, such as float2, float3, and float4 for 2-, 3-, and 4-component vectors. Matrices append rows x columns, as in float3x3 and float4x4, and typically represent transformations or orientations. Variables in HLSL can be local, global, or passed between shader stages.

You'll also encounter semantics, which are names attached to shader inputs and outputs that tell the pipeline what each value means, such as POSITION, COLOR, and TEXCOORD. Names that start with SV_ are system-value semantics, whose meaning is fixed by Direct3D. For instance, if a vertex shader has an output variable called "position", attaching the semantic SV_Position tells the pipeline that it holds the vertex's clip-space position, which the rasterizer then maps to the right location on the screen.

Semantics also connect variables between shader stages. In a vertex shader, you may have an output named "color" representing the vertex color. Attaching the semantic COLOR0 to it, and the same semantic to an input of the pixel shader, passes the interpolated value on to the pixel shader, where it can help determine the final pixel color. The number after a semantic name, as in COLOR0, is an index that distinguishes several values with the same name (COLOR0, COLOR1, and so on). To choose which render target a pixel shader writes to, DirectX 11 uses the SV_Target semantic instead: SV_Target0 through SV_Target7 address up to eight render targets bound at once. This feature is often referred to as Multiple Render Targets (MRTs).

Shaders have control structures like if-else statements for conditional execution. For instance, we can change the color of a pixel depending on its screen position. We also have loops, such as 'for' and 'while', for repeating sections of code. Loops are less common in shaders than in other kinds of code, but they are useful for tasks like iterating through multiple lights or building repeated patterns.

The pixel shader stage comes right after the rasterizer, which follows vertex processing, and before the output-merger stage, which writes the final result to the render target. Its inputs are typically interpolated from the vertex shader outputs and include the pixel position, texture coordinates, colors, and other per-vertex data. Pixel shaders can also read textures, constant buffers, and other resources. The primary output of a pixel shader is usually the final pixel color, but it can also write a depth value (SV_Depth) or several colors at once, one per render target.

Let's consider an example of a pixel shader. It takes interpolated texture coordinates and a texture resource as inputs. The shader's task is to retrieve the color from the texture at the given coordinates, possibly apply color transformations, and then output the resulting color. Although this seems straightforward, when the same small program runs for each of the millions of pixels on the screen, the final image can be remarkably impressive.

Texture Mapping and Sampling

Texture mapping is the process we use to put an image on a shape made of vertices, edges, and faces, which we call a geometric object. The image we use is called a texture.

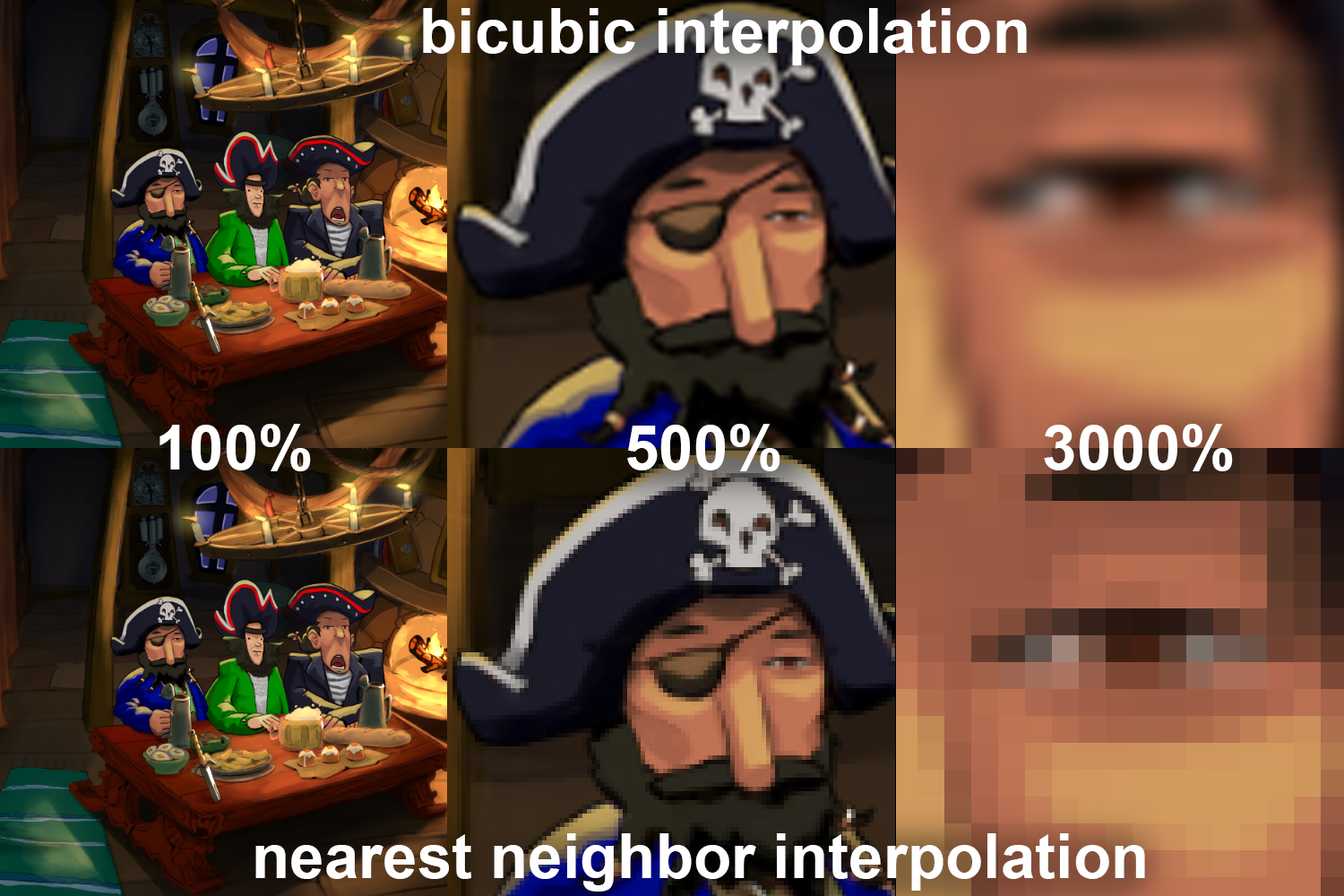

To do texture mapping, we do not paste the whole image onto the shape all at once. Instead, for each pixel the shape covers, we decide which part of the image it should show. We call this process texture sampling. The pixels of a texture are usually called texels, to tell them apart from the pixels on the screen. During texture sampling, we pick the color for a pixel by finding the position on the texture that corresponds to it. There are different ways to pick this color. One way is to take the color of the texel closest to that position (the nearest-neighbor, or point, method). Another way is to blend the colors of the few texels around that position (interpolation methods, such as bilinear filtering). The way we choose to pick the color is called the texture filtering mode.
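The two filtering modes just described can be sketched on the CPU in C++. This is a simplified single-channel model, roughly what point and linear filtering (for example D3D11_FILTER_MIN_MAG_MIP_POINT and D3D11_FILTER_MIN_MAG_MIP_LINEAR) do on a single mip level with clamped addressing. The Texture type and the function names are hypothetical, and real hardware differs in precision details.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical single-channel texture, stored row by row (one float per texel).
struct Texture {
    int width;
    int height;
    std::vector<float> texels;

    // Fetch with clamp-to-edge addressing: out-of-range indices reuse the border texel.
    float Fetch(int x, int y) const {
        x = std::clamp(x, 0, width - 1);
        y = std::clamp(y, 0, height - 1);
        return texels[static_cast<std::size_t>(y) * width + x];
    }
};

// Nearest-neighbor (point) filtering: use the one texel whose area contains (u, v).
float SampleNearest(const Texture& t, float u, float v) {
    return t.Fetch(static_cast<int>(std::floor(u * t.width)),
                   static_cast<int>(std::floor(v * t.height)));
}

// Bilinear filtering: blend the four texels around (u, v). In Direct3D, texel
// centers sit at (i + 0.5) / width, hence the 0.5 shift into texel space.
float SampleBilinear(const Texture& t, float u, float v) {
    assert(t.width > 0 && t.height > 0);
    float x = u * t.width - 0.5f;
    float y = v * t.height - 0.5f;
    int x0 = static_cast<int>(std::floor(x));
    int y0 = static_cast<int>(std::floor(y));
    float fx = x - x0;  // how far past texel x0 we are, 0..1
    float fy = y - y0;
    float top    = t.Fetch(x0, y0)     * (1 - fx) + t.Fetch(x0 + 1, y0)     * fx;
    float bottom = t.Fetch(x0, y0 + 1) * (1 - fx) + t.Fetch(x0 + 1, y0 + 1) * fx;
    return top * (1 - fy) + bottom * fy;
}
```

For a 2x1 texture holding 0 and 1, SampleNearest at u = 0.3 returns 0, while SampleBilinear at u = 0.5, exactly halfway between the two texel centers, returns 0.5.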

In a pixel shader, we do texture sampling by reading color information from the texture. To do this, we need two important inputs: a SamplerState and the texture coordinates. The texture coordinates tell us where on the texture to look for a pixel's color. They are usually values between 0 and 1, no matter how big or small the texture is, which makes it easy to put the same texture on a shape at any resolution. The SamplerState tells us how to turn that position into a color: which filtering mode to use when the position falls between texels, and what to do when the coordinates fall outside the 0 to 1 range (for example, wrapping around or clamping to the edge). With the right SamplerState and texture coordinates, we can fetch the right color values from the texture.

```hlsl
Texture2D myTexture;    // Our texture resource
SamplerState mySampler; // Our sampler state

float4 MyPixelShader(float2 texCoords : TEXCOORD0) : SV_TARGET
{
    // Sample the color from the texture at the given coordinates
    float4 color = myTexture.Sample(mySampler, texCoords);
    return color;
}
```

In this example, texCoords is the input to our pixel shader, usually interpolated from the vertex data. We use these coordinates to sample a color from myTexture using mySampler, and that's the color we output from the pixel shader.
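The SamplerState also decides what happens when texture coordinates leave the 0 to 1 range, through its address mode (the AddressU, AddressV, and AddressW fields of D3D11_SAMPLER_DESC). The C++ sketch below models three common modes at the level of integer texel indices. AddressMode and ResolveTexelIndex are hypothetical names; the hardware applies these rules to coordinates as part of filtering.

```cpp
#include <cassert>

// Hypothetical enum modelling three of the D3D11_TEXTURE_ADDRESS_MODE values.
enum class AddressMode { Wrap, Mirror, Clamp };

// Maps any integer texel index, including negative ones and ones past the
// edge, to a valid index in [0, size).
int ResolveTexelIndex(int i, int size, AddressMode mode)
{
    assert(size > 0);
    switch (mode) {
    case AddressMode::Wrap: {
        int m = i % size;              // % keeps the sign of i in C++,
        return m < 0 ? m + size : m;   // so shift negatives back into range
    }
    case AddressMode::Mirror: {
        int period = 2 * size;         // one forward pass plus one reversed pass
        int m = i % period;
        if (m < 0) m += period;
        return m < size ? m : period - 1 - m;
    }
    case AddressMode::Clamp:
    default:
        return i < 0 ? 0 : (i >= size ? size - 1 : i);
    }
}
```

For a texture 4 texels wide, index 5 wraps to 1, mirrors to 2, and clamps to 3.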

Arrays and Textures in Shaders

Arrays in shaders are much like arrays in any other programming language, serving as a collection of elements of the same type. These elements can be any HLSL data type, including vectors and matrices, and even user-defined structures. Here's an example of an array of four 2D vectors:

```hlsl
float2 myArray[4];
```

We can access the elements of the array using their indices, just like in C#. For instance, myArray[0] would give us the first element of myArray.

Textures, on the other hand, serve a more specific purpose in HLSL. In the context of shaders, a texture is a resource that can hold data for use in our shader programs, typically image data. We can sample colors from these textures, as we saw in the previous section, and apply them to our geometry.

Declaring a texture in HLSL is straightforward as well. Here's an example of a 2D texture declaration:

```hlsl
Texture2D myTexture;
```

Sampling from this texture is possible with myTexture.Sample(sampler, coords), where sampler is a SamplerState that describes how to filter the texture, and coords are the texture coordinates.

Now, how about we combine these concepts in an example? Suppose we want to blend the colors from multiple textures based on some weights. We could store our textures in an array and our weights in another array, like so:

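```hlsl
Texture2D myTextures[4]; // Our textures
SamplerState mySampler;  // One sampler shared by all four textures

// Our weights, set by the application
// (in a constant buffer, each array element is padded to 16 bytes)
float weights[4];

float4 MyPixelShader(float2 texCoords : TEXCOORD0) : SV_TARGET
{
    // Initialize to transparent black
    float4 color = float4(0, 0, 0, 0);

    // Add up the colors from each texture, weighted by our weights.
    // [unroll] flattens the loop so each texture is indexed with a
    // compile-time constant, which Shader Model 5.0 expects for texture arrays.
    [unroll]
    for (int i = 0; i < 4; i++)
    {
        color += weights[i] * myTextures[i].Sample(mySampler, texCoords);
    }

    return color;
}
```

In this shader, we're sampling colors from each texture in our array, multiplying each color by its corresponding weight, and adding them all up to get our final color.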
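The same weighted sum can be checked on the CPU. This C++ sketch adds one thing the shader does not do: it divides each weight by the total, so the blend keeps the brightness of its inputs even when the weights do not add up to 1. That normalization is an assumption about the behavior you want, and the Color type and BlendNormalized name are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

struct Color { float r, g, b, a; };

// CPU version of the weighted blend. Unlike the shader, it divides each
// weight by the total (an assumption about the desired behavior), so the
// result keeps the brightness of its inputs even if the weights don't sum to 1.
Color BlendNormalized(const std::array<Color, 4>& colors,
                      const std::array<float, 4>& weights)
{
    float total = 0.0f;
    for (float w : weights) total += w;
    assert(total > 0.0f && "weights must have a positive sum");

    Color out{0, 0, 0, 0};
    for (std::size_t i = 0; i < colors.size(); ++i) {
        float w = weights[i] / total;
        out.r += w * colors[i].r;
        out.g += w * colors[i].g;
        out.b += w * colors[i].b;
        out.a += w * colors[i].a;
    }
    return out;
}
```

With weights of 2, 2, 0, and 0, opaque red and opaque green each contribute half, giving (0.5, 0.5, 0, 1).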

Interpolation and Built-in Functions in HLSL

Interpolation is very important when we work with shaders. A vertex shader runs once per vertex and a pixel shader once per pixel, so the data we supply lives at the vertices, while the pixel shader needs a value for every pixel in between. For example, we might know the color at each of a triangle's three corners, but what color should a pixel in the middle of the triangle get? That's when we use interpolation.

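In shaders, interpolation is how we estimate values at points in between from the values we know at other points. It is used to work out the color, position, or texture coordinates of each pixel from the data stored at the vertices. The GPU does this interpolation for us: the rasterizer interpolates each vertex shader output across the triangle before handing it to the pixel shader. By default the interpolation is perspective-correct, and HLSL interpolation modifiers such as nointerpolation and noperspective change that for individual inputs.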
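For a triangle, this interpolation is usually expressed with barycentric weights: three numbers that say how close a point is to each vertex and always add up to 1. The C++ sketch below computes them with edge functions and blends one attribute. The names are hypothetical, and it uses plain screen-space (affine) interpolation, whereas the GPU's default also corrects for perspective.

```cpp
#include <cassert>
#include <cmath>

struct Vec2 { float x, y; };

// Twice the signed area of triangle abc; its sign says which side of ab c lies on.
float EdgeFunction(Vec2 a, Vec2 b, Vec2 c)
{
    return (b.x - a.x) * (c.y - a.y) - (b.y - a.y) * (c.x - a.x);
}

// Interpolates a per-vertex value (for example, the red channel of each vertex
// color) at point p inside triangle v0 v1 v2, using barycentric weights.
// Screen-space (affine) only: no perspective correction.
float InterpolateAttribute(Vec2 v0, Vec2 v1, Vec2 v2,
                           float a0, float a1, float a2, Vec2 p)
{
    float area = EdgeFunction(v0, v1, v2);
    assert(std::fabs(area) > 0.0f && "degenerate triangle");
    float w0 = EdgeFunction(v1, v2, p) / area;  // weight of v0
    float w1 = EdgeFunction(v2, v0, p) / area;  // weight of v1
    float w2 = EdgeFunction(v0, v1, p) / area;  // weight of v2
    return w0 * a0 + w1 * a1 + w2 * a2;
}
```

For the triangle (0, 0), (4, 0), (0, 4) with attribute values 1, 0, and 0, the point (1, 1) gets weights 0.5, 0.25, and 0.25, so the interpolated value is 0.5.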

Now, let's talk about built-in functions in HLSL. In terms of mathematical functions, we have basics like sin, cos, abs, and sqrt, among others. These can be used to create interesting patterns and movements. For instance, using sin or cos with time as input can make an object oscillate back and forth.

Geometric functions, meanwhile, help us calculate distances, dot products, cross products, and so forth. For example, the length function can be used to find the distance from a point to the origin, which can be used to create radial gradients or circular movements.

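```hlsl
// Oscillate the red channel of our color over time
cbuffer TimeConstants : register(b0) // buffer name is illustrative
{
    float time; // elapsed seconds, updated by the application every frame
};

float4 MyPixelShader() : SV_TARGET
{
    // Sin oscillates between -1 and 1, so we adjust it to 0-1
    float red = (sin(time) + 1) / 2;

    // Return a color with our oscillating red channel
    return float4(red, 0, 0, 1);
}
```

```hlsl
// Create a radial gradient centered on the middle of the screen
cbuffer ScreenConstants : register(b0) // buffer name is illustrative
{
    float2 resolution; // render target size in pixels
};

float4 MyPixelShader(float4 position : SV_POSITION) : SV_TARGET
{
    // In a pixel shader, SV_POSITION.xy holds the pixel-center coordinates
    // in pixels, with (0, 0) at the top-left corner of the render target.
    float2 center = resolution / 2;
    float distanceToCenter = length(position.xy - center);
    float gradient = 1 - clamp(distanceToCenter / (resolution.x / 2), 0, 1);
    return float4(gradient, gradient, gradient, 1);
}
```

In the first example, we use the sin function to make the red channel of our color oscillate over time. In the second example, we use the length function to create a radial gradient that fades out from the center of the screen. Because time and resolution are the same for every pixel in a draw call, they come from a constant buffer that the application updates rather than from per-pixel inputs.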
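Both formulas are easy to verify on the CPU before they go into a shader. This C++ sketch mirrors them directly; the function names are hypothetical, and std::sqrt of the squared distance plays the role of HLSL's length.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// sin(t) swings between -1 and 1; (sin(t) + 1) / 2 remaps that to 0..1,
// the range a color channel expects.
float OscillatingRed(float timeSeconds)
{
    return (std::sin(timeSeconds) + 1.0f) / 2.0f;
}

// Brightness of the radial gradient at pixel (px, py): 1 at the center of a
// width x height target, falling linearly to 0 at half the width away.
float RadialGradient(float px, float py, float width, float height)
{
    assert(width > 0 && height > 0);
    float dx = px - width / 2;
    float dy = py - height / 2;
    float distanceToCenter = std::sqrt(dx * dx + dy * dy);  // HLSL: length()
    return 1.0f - std::clamp(distanceToCenter / (width / 2), 0.0f, 1.0f);
}
```

On an 800x600 target, the center pixel (400, 300) gets a brightness of 1, a pixel 200 pixels to its right gets 0.5, and the corners, which are more than 400 pixels away, get 0.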

Pixel Shaders in 2D: Basic Applications

Now that we've discussed the basics and some advanced features of pixel shaders, let's dive into some common uses of pixel shaders in 2D, starting with basic applications. We'll discuss color changes, gradients, and pattern creation, providing practical examples for each.
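Each of the three techniques comes down to a small formula per pixel. As a CPU-side C++ sketch with hypothetical names, one function per technique, they look like this. GrayRec709 is not part of this section's shaders: it shows a common alternative to plain averaging that weights the channels by the Rec. 709 luma coefficients, because the eye is more sensitive to green than to red or blue.

```cpp
#include <cassert>

struct Color { float r, g, b, a; };

// Grayscale by plain averaging of the three color channels.
float GrayAverage(Color c) { return (c.r + c.g + c.b) / 3.0f; }

// Alternative grayscale: Rec. 709 luma weights (green counts most, blue least).
float GrayRec709(Color c) { return 0.2126f * c.r + 0.7152f * c.g + 0.0722f * c.b; }

// lerp as HLSL defines it: a + t * (b - a), applied per channel.
Color Lerp(Color a, Color b, float t)
{
    return { a.r + t * (b.r - a.r), a.g + t * (b.g - a.g),
             a.b + t * (b.b - a.b), a.a + t * (b.a - a.a) };
}

// Horizontal red-to-blue gradient for a pixel at x in a target `width` pixels wide.
Color HorizontalGradient(float x, float width)
{
    assert(width > 0);
    return Lerp({1, 0, 0, 1}, {0, 0, 1, 1}, x / width);
}

// Stripe test: true inside even-numbered stripes of `stripeWidth` pixels.
bool IsStripe(float x, float stripeWidth)
{
    return static_cast<int>(x / stripeWidth) % 2 == 0;
}
```

For pure green, averaging gives about 0.33 while the Rec. 709 weighting gives 0.7152, which better matches how bright green is perceived.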

Firstly, pixel shaders are perfect for implementing color changes. Whether you're looking to apply a color filter or perform more complex color transformations, pixel shaders are the tool for the job. For example, to turn an image into grayscale, you could average the red, green, and blue channels of each pixel:

```hlsl
Texture2D myTexture;
SamplerState mySampler;

float4 MyPixelShader(float2 texCoords : TEXCOORD0) : SV_TARGET
{
    float4 color = myTexture.Sample(mySampler, texCoords);
    float grayscale = (color.r + color.g + color.b) / 3;
    return float4(grayscale, grayscale, grayscale, color.a);
}
```

Next, we can use pixel shaders to create gradients. By varying our output color based on the position of each pixel, we can create smooth transitions between colors. Here's an example of a horizontal gradient from red to blue:

```hlsl
cbuffer ScreenConstants : register(b0) // buffer name is illustrative
{
    float2 resolution; // render target size in pixels
};

float4 MyPixelShader(float4 position : SV_POSITION) : SV_TARGET
{
    // 0 at the left edge of the target, 1 at the right edge
    float t = position.x / resolution.x;
    float4 color = lerp(float4(1, 0, 0, 1), float4(0, 0, 1, 1), t);
    return color;
}
```

Lastly, pixel shaders can generate patterns. By using mathematical functions, we can create a variety of patterns like stripes, checkerboards, polka dots, and more. Let's create a striped pattern:

```hlsl
float4 MyPixelShader(float4 position : SV_POSITION) : SV_TARGET
{
    // Width of each stripe in pixels
    float stripeWidth = 50;

    // Even-numbered stripes (counting from the left edge) are red
    bool isStripe = (int(position.x / stripeWidth) % 2) == 0;

    // Red and blue stripes
    return isStripe ? float4(1, 0, 0, 1) : float4(0, 0, 1, 1);
}
```

Pixel Shaders in 2D: Advanced Use Cases

Moving forward, let's dive into some more advanced uses of pixel shaders in 2D, specifically lighting and post-processing effects. These use cases can elevate the look and feel of our 2D graphics, adding depth and atmosphere that enhances the overall visual experience.

Starting with lighting effects: even though we are working in 2D, we can still simulate different lighting conditions using shaders. For instance, let's consider a directional lighting effect. In this case, all we need is a direction and a color for our light. We then calculate the dot product between a 'normal' direction for the pixel (in 2D, this could be read from a normal map or just be a constant value) and the direction pointing toward the light, clamp it at zero, and use the result to scale the pixel's color. This is Lambert's cosine law. With a constant normal, every pixel receives the same intensity, so the lighting only varies across the image when the normal does, as it does with a normal map.
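The core of this effect is Lambert's cosine law, and it can be checked on the CPU before it goes into a shader. In this C++ sketch (Float3, Dot, Normalize, and LambertIntensity are hypothetical names), the second argument is the direction from the surface toward the light.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

struct Float3 { float x, y, z; };

float Dot(Float3 a, Float3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

Float3 Normalize(Float3 v)
{
    float len = std::sqrt(Dot(v, v));
    assert(len > 0.0f && "cannot normalize a zero vector");
    return { v.x / len, v.y / len, v.z / len };
}

// Lambertian (diffuse) intensity: the cosine of the angle between the surface
// normal and the direction toward the light, clamped at 0 so surfaces facing
// away from the light go dark instead of negative.
float LambertIntensity(Float3 normal, Float3 toLight)
{
    return std::max(Dot(Normalize(normal), Normalize(toLight)), 0.0f);
}
```

With a normal of (0, 0, 1), a light direction of (1, 1, 1) gives about 0.577, while (1, -1, 0), which lies entirely in the screen plane, gives 0. With a constant normal like this, the light direction needs a component along +Z, toward the viewer, or every pixel turns black.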

```hlsl
Texture2D myTexture;
SamplerState mySampler;

// "static const" makes these compile-time constants. A plain global with an
// initializer would go into the $Globals constant buffer, and Direct3D 11
// would not apply the initial value for us.
static const float3 lightDirection = float3(1, 1, 1); // Toward the light: up-right, in front of the screen (+Y up)
static const float3 lightColor = float3(1, 1, 1);     // White light

float4 MyPixelShader(float2 texCoords : TEXCOORD0) : SV_TARGET
{
    float4 color = myTexture.Sample(mySampler, texCoords);
    float3 normal = float3(0, 0, 1); // Facing the viewer, along +Z
    float lightIntensity = max(dot(normal, normalize(lightDirection)), 0);
    float3 litColor = color.rgb * lightColor * lightIntensity;
    return float4(litColor, color.a);
}
```

Post-processing effects operate on the entire rendered image, after all the objects have been drawn. These effects include blur, sharpen, color correction, and more. As an example, let's look at how we could create a blur effect. A basic blur effect can be achieved by sampling multiple points around each pixel and averaging their colors. This gives the impression that the colors are 'spreading out', creating a blur.

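```hlsl
Texture2D myTexture;
SamplerState mySampler;

cbuffer ScreenConstants : register(b0) // buffer name is illustrative
{
    float2 resolution; // size of myTexture in texels
};

// Offsets of the pixel and its eight neighbors, in texels.
// "static const" keeps the table in the shader instead of a constant buffer.
static const float2 offsets[9] = {
    float2(-1, -1), float2(0, -1), float2(1, -1),
    float2(-1,  0), float2(0,  0), float2(1,  0),
    float2(-1,  1), float2(0,  1), float2(1,  1)
};

float4 MyPixelShader(float2 texCoords : TEXCOORD0) : SV_TARGET
{
    float4 colorSum = float4(0, 0, 0, 0);
    [unroll]
    for (int i = 0; i < 9; i++)
    {
        // Convert the texel offset into texture-coordinate units
        float2 offset = offsets[i] / resolution;
        colorSum += myTexture.Sample(mySampler, texCoords + offset);
    }
    return colorSum / 9;
}
```

In this shader, we're sampling 9 points, the pixel itself and its eight neighbors, and averaging their colors with equal weights. This is known as a 3x3 box blur, and it creates a blur effect as the colors 'smear' into each other.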
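The averaging step of a 3x3 box blur can also be run on the CPU to check results. This C++ sketch works on a single-channel image. BoxBlur3x3 is a hypothetical name, and clamping at the image edges is an assumption, since the shader's edge behavior depends on the address mode of its sampler.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// 3x3 box blur on a single-channel image stored row by row: each output pixel
// is the average of itself and its eight neighbors. Out-of-range neighbors
// reuse the nearest edge pixel (clamp addressing).
std::vector<float> BoxBlur3x3(const std::vector<float>& src, int width, int height)
{
    assert(width > 0 && height > 0);
    assert(src.size() == static_cast<std::size_t>(width) * height);
    auto at = [&](int x, int y) {
        x = std::clamp(x, 0, width - 1);
        y = std::clamp(y, 0, height - 1);
        return src[static_cast<std::size_t>(y) * width + x];
    };
    std::vector<float> dst(src.size());
    for (int y = 0; y < height; ++y)
        for (int x = 0; x < width; ++x) {
            float sum = 0.0f;
            for (int dy = -1; dy <= 1; ++dy)
                for (int dx = -1; dx <= 1; ++dx)
                    sum += at(x + dx, y + dy);
            dst[static_cast<std::size_t>(y) * width + x] = sum / 9.0f;
        }
    return dst;
}
```

Blurring the one-row image 0, 0, 9, 0, 0 this way gives 0, 3, 3, 3, 0: the bright pixel is spread evenly over itself and its neighbors.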

These are just examples, but they illustrate how pixel shaders can be used to create dynamic lighting effects and post-processing effects in 2D graphics with DirectX 11.