DirectX 11 and WinUI 3.0

Camera And Transformations

June 15th, 2023

Let's continue with our look at how to render stuff with DirectX 11 inside a WinUI 3.0 window.

Updating Vertex Shader

To make changes in the view, we need to be able to update the data the vertex shader works with while the program is running. Let's add a new method called Update, where we'll assign values to our fresh matrices, and call it from the timer tick before Draw.

```csharp
private void Timer_Tick(object sender, object e)
{
    Update(); // update camera, projection, and model state first
    Draw();   // then render the scene
}

private void Update()
{
}
```

This way, if there are changes to the camera, projection, or the model, we can update their states in Update before we render the scene in Draw. While we're at it, it's quite trivial to make the model rotate in the view, so let's do that too. We can add an angle variable in the Update method that we add to the model's rotation each frame.

```csharp
float angle = 0.05f; // radians per frame; change this as needed

worldMatrix = worldMatrix * Matrix4x4.CreateRotationY(angle);
```

If you remember, the worldMatrix is there so we can modify our model. But the worldMatrix isn't stored in, or bound to, the Mesh itself. Instead, it's used to transform the vertices of the Mesh from model space (where the vertices are defined relative to the model's origin) to world space (where the vertices are defined relative to a global, "world" origin). This transformation happens in the vertex shader: you pass the worldMatrix to the shader, and the shader gets the vertex data through the vertex buffer. We only have one model, but what if you had several? How does the vertex shader handle the data coming from the vertex buffer? Well, there are a couple of options.

1. Separate Vertex Buffers: This approach is straightforward. Each model's vertices would be put into a separate vertex buffer, and when you want to render a model, you'd bind the appropriate vertex buffer and make a draw call. This can be less memory efficient if you have many small models, but it's easier to manage.

2. Single Vertex Buffer: All vertices for all models are placed into a single, large vertex buffer. When rendering, you'd bind this one vertex buffer once, then make multiple draw calls, specifying the appropriate start vertex and vertex count for each model (or start index and index count, if you also use an index buffer). This can be more memory efficient, especially if you have many small models, but you need to keep track of where each model's vertices live in the buffer.

Both approaches have their uses and are common in graphics programming, so it's up to you to decide which fits your needs best. Changing the bound vertex buffer is a state change, and in graphics programming you generally want to minimize state changes, as they can have a performance impact. So if you have many models and are aiming for efficiency, the second option could be better. However, if your scene isn't too complex, the difference may not be noticeable. Either way, each model also needs its own world matrix, since it's the world matrix that transforms that model's vertices from its own model space into the world space shared by the entire scene.
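To make the single-buffer option more concrete, here's a minimal C# sketch of the bookkeeping it involves. The two meshes (triangle and quad) and their world matrices are hypothetical, positions only, and the sketch stops short of Direct3D: it just concatenates the vertices the way you would before uploading them to one vertex buffer, and records the start vertex and vertex count each draw call would need.

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

// Hypothetical meshes, positions only, for illustration.
Vector3[] triangle = { new(0, 1, 0), new(1, -1, 0), new(-1, -1, 0) };
Vector3[] quad =
{
    new(-1, 1, 0), new(1, 1, 0), new(1, -1, 0),   // first triangle
    new(-1, 1, 0), new(1, -1, 0), new(-1, -1, 0), // second triangle
};
var meshes = new List<Vector3[]> { triangle, quad };

// One big array: this is what would go into the single vertex buffer.
var allVertices = new List<Vector3>();
// Where each model lives inside that buffer.
var ranges = new List<(int Start, int Count)>();

foreach (var mesh in meshes)
{
    ranges.Add((allVertices.Count, mesh.Length)); // start = vertices added so far
    allVertices.AddRange(mesh);
}

// Each model still needs its own world matrix (hypothetical placements).
Matrix4x4[] worldMatrices =
{
    Matrix4x4.CreateTranslation(-2, 0, 0),
    Matrix4x4.CreateTranslation(2, 0, 0),
};

for (int i = 0; i < ranges.Count; i++)
{
    // In the renderer: write worldMatrices[i] into the constant buffer, then
    // issue a draw call for this range, e.g. Draw(count, start).
    Console.WriteLine($"model {i}: start={ranges[i].Start}, count={ranges[i].Count}, " +
                      $"offset={worldMatrices[i].Translation}");
}
```

The triangle gets start 0 and count 3, and the quad gets start 3 and count 6. Keeping each range next to its model's world matrix is one simple way to organize this; a small per-model class would work just as well.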

Ok, so how do we pass data to the vertex shader? Well, my friend, that happens via the constant buffer.

Constant Buffer

So, what is a constant buffer? It is a mechanism that allows you to pass data from your application running on the CPU to your shaders running on the GPU.

- Constant: The data in the buffer doesn't change while a single draw call is being executed. This doesn't mean the data in the buffer can't change at all. It just means that once you've launched a draw call, the data used in that draw call won't change. Between draw calls, you can change the data in the buffer as much as you want. This is what you're doing in your update loop when you update the world, view, and projection matrices and send them to the GPU via the constant buffer.
- Buffer: It's a region of memory that you allocate on the GPU. When you create the buffer, you tell the GPU how much memory you need, and the GPU gives you a chunk of memory that you can write to.

So, a "constant buffer" is a chunk of GPU memory that you can write to from the CPU, and read from in a shader. The data you write to the buffer won't change while a single draw call is being processed.

This mechanism is important because shaders can't just read variables out of your application's memory; on a discrete graphics card, the CPU and GPU even have their own separate pools of memory. So if you want to give data to a shader, you can't just pass it directly. Instead, you have to put the data in a buffer on the GPU, and then tell the shader where to find it.

This way, when you update the world, view, and projection matrices each frame, you're writing that updated data to a constant buffer. Then, in your shader code, you're reading that data from the constant buffer and using it to transform the vertices of your mesh.

Let's define a struct called ConstantBufferData that represents the data we want to pass to the shader. Make sure to use the StructLayout attribute with LayoutKind.Sequential (I also set Pack = 16), so the fields are laid out in memory in the same order the shader expects.

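```csharp
// Requires: using System.Numerics; using System.Runtime.InteropServices; using Vortice.Direct3D11;

// Handle to the GPU-side constant buffer.
private ID3D11Buffer constantBuffer;

// CPU-side mirror of the shader's cbuffer. Sequential keeps the fields in
// declaration order; each Matrix4x4 is 64 bytes, so the total is 192 bytes.
[StructLayout(LayoutKind.Sequential, Pack = 16)]
struct ConstantBufferData
{
    public Matrix4x4 World;
    public Matrix4x4 View;
    public Matrix4x4 Projection;
}
```

Strictly speaking, Pack = 16 doesn't force each field onto a 16-byte boundary: Pack sets the maximum alignment, and each field is aligned to the smaller of that value and its own natural alignment (4 bytes for the floats inside a Matrix4x4). What really matters here is that the size of a constant buffer must be a multiple of 16 bytes, and that HLSL packs constant-buffer data into 16-byte registers. The struct is made up of 3 Matrix4x4 values, and each Matrix4x4 is made up of 16 float values of 4 bytes each. Therefore, the size of the struct is 3 * 16 * 4 = 192 bytes, which of course is a multiple of 16, and each matrix starts on a 16-byte boundary without any extra padding.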
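A quick way to sanity-check that arithmetic is to let the runtime compute the sizes. This small C# sketch uses Marshal.SizeOf on System.Numerics.Matrix4x4 together with a hypothetical AlignTo16 helper (not part of the project) to show that three matrices already land on a multiple of 16, while tacking a single float onto the same data pushes the required size up to the next multiple.

```csharp
using System;
using System.Numerics;
using System.Runtime.InteropServices;

// Hypothetical helper: round a size up to the next multiple of 16 bytes,
// since a constant buffer's size must be a multiple of 16.
static int AlignTo16(int sizeInBytes) => (sizeInBytes + 15) & ~15;

int matrixSize = Marshal.SizeOf<Matrix4x4>();  // 16 floats * 4 bytes
int cbufferSize = 3 * matrixSize;              // World + View + Projection

// What-if: one extra float tacked onto the end of the same data.
int withExtraFloat = cbufferSize + sizeof(float);

Console.WriteLine($"Matrix4x4 = {matrixSize} bytes");
Console.WriteLine($"3 matrices = {cbufferSize} bytes, aligned = {AlignTo16(cbufferSize)}");
Console.WriteLine($"3 matrices + 1 float = {withExtraFloat} bytes, aligned = {AlignTo16(withExtraFloat)}");
```

The last line is the case to watch for: 196 bytes rounds up to 208. If the shader's cbuffer declares even one float more than the C# struct, the shader expects 208 bytes while a buffer created from the struct only has 192, and the Direct3D debug layer will warn that the bound constant buffer is too small.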

Next, we create the constant buffer in the CreateResources method.

```csharp
// Size the buffer from the struct (192 bytes) and mark it as a constant buffer.
var constantBufferDescription = new BufferDescription(Marshal.SizeOf<ConstantBufferData>(), BindFlags.ConstantBuffer);
constantBuffer = device.CreateBuffer(constantBufferDescription);
```

We create the constant buffer only once, using device.CreateBuffer() with a BufferDescription that describes the size and usage of the buffer. This buffer is created on the GPU, and we get back a handle to it (in our case, constantBuffer).

Then, let's go to the SetRenderState method and bind the constant buffer to a slot for the vertex shader to use. In this case, we're binding it to slot 0, which the shader refers to as register b0.

```csharp
// Bind the constant buffer to vertex shader slot 0 (register b0 in HLSL).
deviceContext.VSSetConstantBuffers(0, new[] { constantBuffer });
```

We also need to initialize the worldMatrix to something. We can use Matrix4x4.Identity, the identity matrix, which leaves the model exactly as it was defined: no translation, rotation, or scaling. So, let's go to the CreateResources method and do just that.

```csharp
worldMatrix = Matrix4x4.Identity;
```

So, remember the Update method? Let's add a few things.

```csharp
private void Update()
{
    // Spin the model a little around the Y axis every frame.
    float angle = 0.05f; // radians per frame; change this as needed
    worldMatrix = worldMatrix * Matrix4x4.CreateRotationY(angle);

    // Copy the current matrices into the CPU-side struct...
    ConstantBufferData data = new ConstantBufferData();
    data.World = worldMatrix;
    data.View = viewMatrix;
    data.Projection = projectionMatrix;

    // ...and upload it to the constant buffer on the GPU.
    deviceContext.UpdateSubresource(data, constantBuffer);
}
```

The ConstantBufferData that we created earlier is a struct that we use to hold our world, view, and projection matrices. Let's break down the code:

- ConstantBufferData data = new ConstantBufferData() creates a new instance of the ConstantBufferData struct. So, it's not a reference to the buffer, just the data we want to send to the buffer. The struct will hold our world, view, and projection matrices.
- Next, we assign our world, view, and projection matrices to the corresponding fields in the ConstantBufferData struct.
- UpdateSubresource(data, constantBuffer): Finally, we update the constant buffer with our new data. The shader will use it to transform the model's vertices from model space, to world space, to camera space, and finally to projection space, which determines where the vertices appear on the screen.
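Here's a small C# sketch of what the rotation part of Update does to worldMatrix over time. It runs the same step 60 times outside any rendering code, as if the timer had ticked 60 times (an arbitrary number chosen for illustration), and compares the result with a single rotation by the total angle. It only uses System.Numerics.

```csharp
using System;
using System.Numerics;

float angle = 0.05f;                        // radians per Update call, as in the article
Matrix4x4 worldMatrix = Matrix4x4.Identity; // starting point, as set in CreateResources

// Simulate 60 timer ticks; each tick calls Update once.
const int ticks = 60;
for (int i = 0; i < ticks; i++)
{
    worldMatrix = worldMatrix * Matrix4x4.CreateRotationY(angle);
}

// Rotations about the same axis add up, so this should match a single
// rotation by the total angle (up to float rounding).
Matrix4x4 expected = Matrix4x4.CreateRotationY(angle * ticks);

// Where the model's +X axis points in world space after those ticks.
Vector3 xAxisInWorld = Vector3.Transform(Vector3.UnitX, worldMatrix);
Console.WriteLine($"total angle = {angle * ticks} rad, model +X now points to {xAxisInWorld}");
```

Since the angle is applied per tick, the spin speed depends on how often the timer fires: 60 ticks add up to 3 radians, a bit over 170 degrees.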

Speaking of the shader, we need to update it to accommodate this new flow of data. We need to declare a constant buffer (cbuffer) with the same layout as our ConstantBufferData struct, registered at b0 to match slot 0. We then use its matrices in the vertex shader to transform the vertices.

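```hlsl
cbuffer ConstantBuffer : register(b0) // slot 0, matching VSSetConstantBuffers(0, ...)
{
    // row_major matches the memory layout of System.Numerics.Matrix4x4
    row_major matrix World;
    row_major matrix View;
    row_major matrix Projection;
    // No padding needed: 3 x 64 bytes = 192 bytes, the same size as ConstantBufferData.
}

...

PixelInputType VS(float4 pos : POSITION)
{
    PixelInputType output;

    // model space -> world space -> view space -> projection space
    output.position = mul(mul(mul(pos, World), View), Projection);

    return output;
}
```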

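The mul(mul(mul(pos, World), View), Projection) is where the transformation happens. The mul function multiplies the vertex position by the world, view, and then projection matrices. The order of multiplication matters when working with transformation matrices, because matrix multiplication is not commutative. For instance, if you have an object and you first rotate it and then translate it, you'll get a different result than if you first translate it and then rotate it. The common order is World * View * Projection. This transforms the vertex from model space to world space, then to view space, and finally to projection (clip) space, ready to be rasterized to the screen. This is also why the cbuffer matrices are declared row_major: System.Numerics stores a Matrix4x4 row by row and treats positions as row vectors, which is exactly what mul(pos, World) expects. Without row_major, HLSL would read each matrix as column-major (its default packing), and you would have to transpose the matrices on the CPU before uploading them.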
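Both points can be checked on the CPU with System.Numerics, which uses the same row-vector convention as mul(pos, World). In this sketch, the rotation, translation, camera position, and projection parameters are arbitrary values chosen for illustration, not the ones from the project; CreateLookAt and CreatePerspectiveFieldOfView are simply convenient ways to get a view and a projection matrix.

```csharp
using System;
using System.Numerics;

// A point one unit along +X in model space.
Vector3 p = new(1, 0, 0);

Matrix4x4 rotate = Matrix4x4.CreateRotationY(MathF.PI / 2); // 90 degrees about Y
Matrix4x4 translate = Matrix4x4.CreateTranslation(0, 0, 5);

// With row vectors, A * B means "apply A first, then B".
Vector3 rotateThenTranslate = Vector3.Transform(p, rotate * translate);
Vector3 translateThenRotate = Vector3.Transform(p, translate * rotate);
Console.WriteLine($"rotate, then translate: {rotateThenTranslate}");
Console.WriteLine($"translate, then rotate: {translateThenRotate}");

// Chaining World, View, and Projection one at a time, like the nested mul()
// calls in the shader, versus applying the combined matrix once.
Matrix4x4 world = rotate * translate;
Matrix4x4 view = Matrix4x4.CreateLookAt(new Vector3(0, 2, 10), Vector3.Zero, Vector3.UnitY);
Matrix4x4 projection = Matrix4x4.CreatePerspectiveFieldOfView(MathF.PI / 4, 16f / 9f, 0.1f, 100f);

Vector4 pos = new(p, 1); // w = 1 because this is a position
Vector4 stepByStep = Vector4.Transform(Vector4.Transform(Vector4.Transform(pos, world), view), projection);
Vector4 combined = Vector4.Transform(pos, world * view * projection);
Console.WriteLine($"step by step: {stepByStep}");
Console.WriteLine($"combined:     {combined}");
```

The first pair ends up at roughly (0, 0, 4) and (5, 0, -1), so swapping the order moves the point somewhere completely different. The last two vectors agree up to float rounding, which is why you could also multiply the three matrices once on the CPU and upload a single combined matrix instead.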

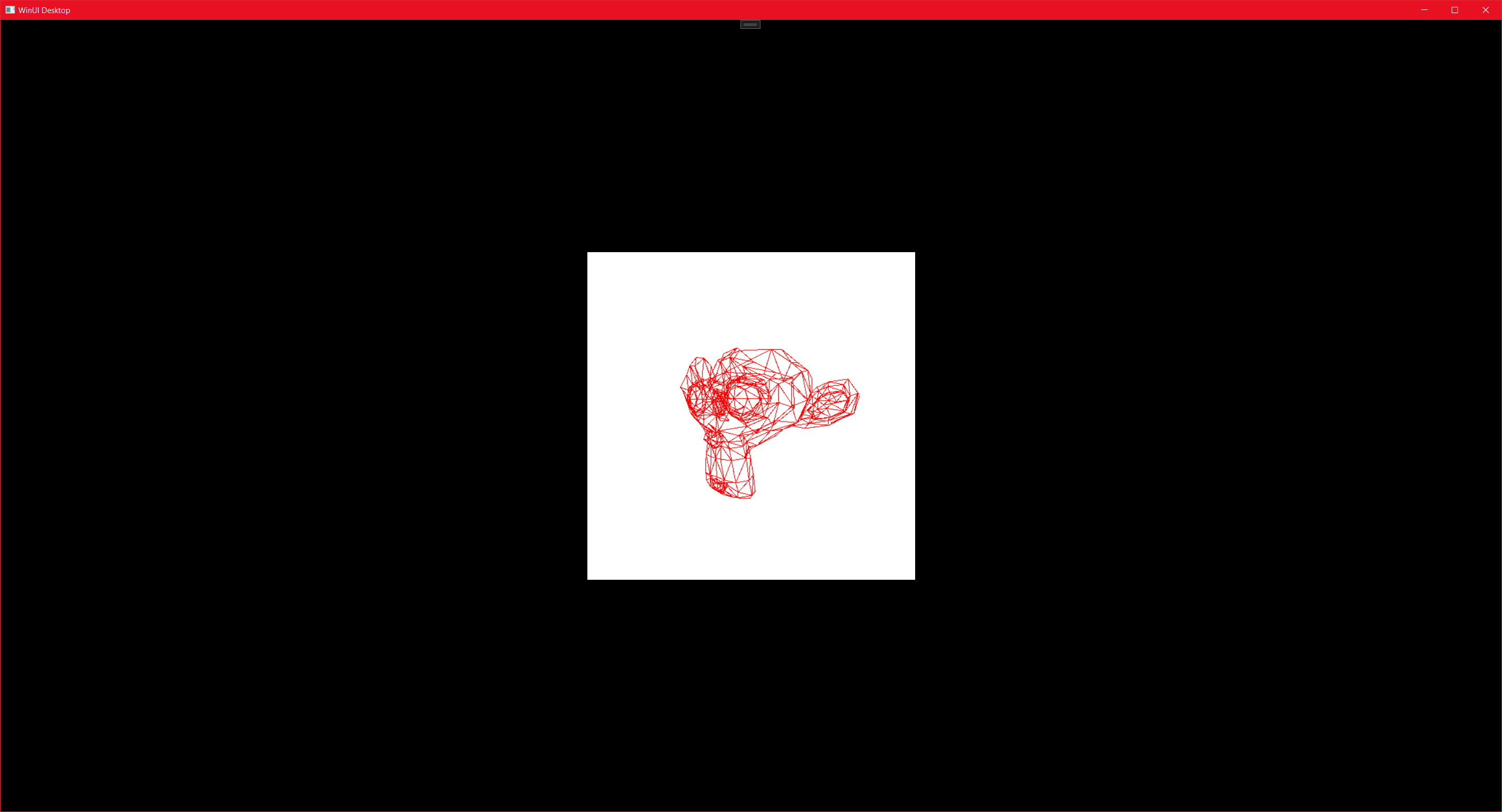

You can tweak the cameraPosition vector to get a more advantageous view of your 3D mesh. One more thing I want to get to, though, is lighting and shading the model. So, let's do that next.

Visual Studio project:

d3dwinui3pt8.zip (49 KB)

D3DWinUI3 part 8 in GitHub