DirectX 11 and WinUI 3.0

Phong Lighting

June 18th, 2023

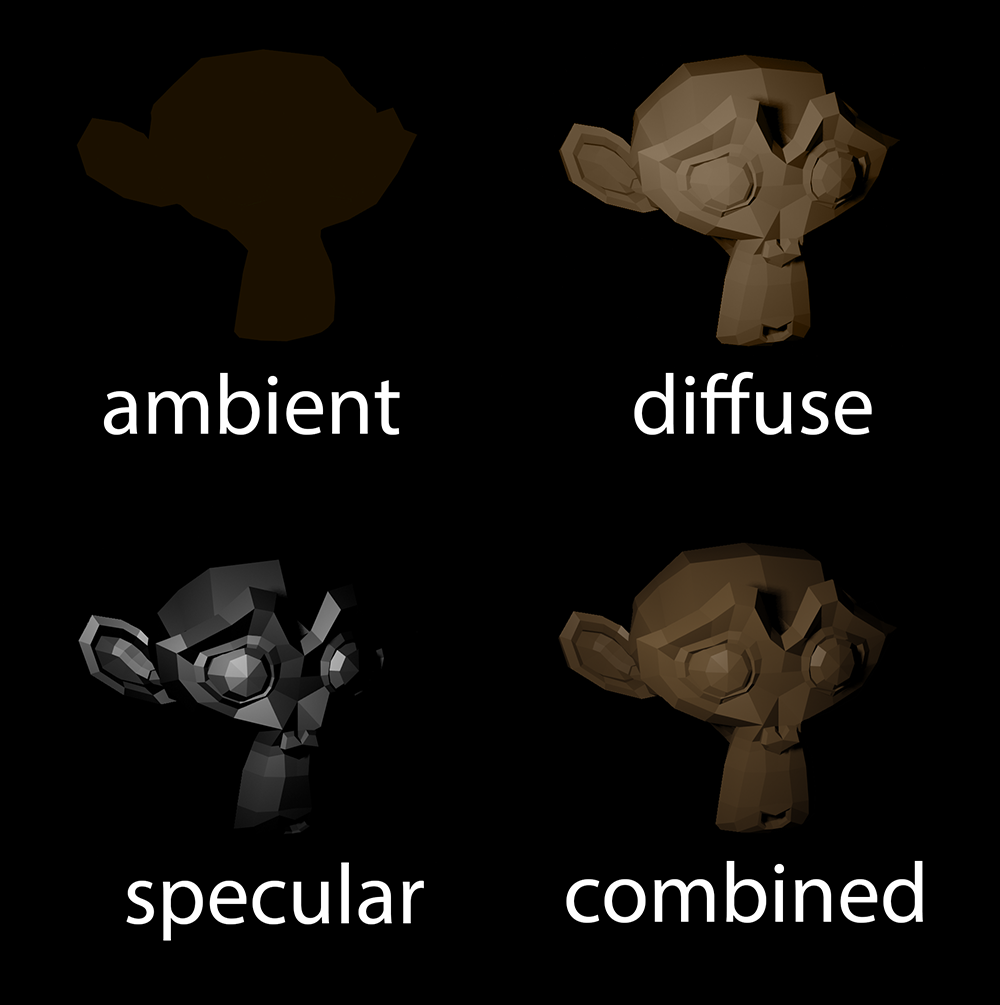

Let's continue with our look at how to render stuff with DirectX 11 inside a WinUI 3.0 window. Next, we'll create a simplified version of Phong lighting. Phong is a combination of ambient light (constant), diffuse light, and specular highlights. We're only going to focus on diffuse lighting here, but the rest can be added once that's working.
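To make the three terms concrete, here's a rough CPU-side sketch in C# using System.Numerics. It isn't the shader from this series: the `PhongSketch` class, the weights, and the shininess value are placeholders picked for illustration, and the specular part is the piece this article leaves out.

```csharp
using System;
using System.Numerics;

// Hypothetical helper for illustration only; not part of the project code.
public static class PhongSketch
{
    // Returns a light intensity in the 0..1 range for one surface point.
    public static float Intensity(
        Vector3 normal, Vector3 surfacePos, Vector3 lightPos, Vector3 eyePos,
        float ambient = 0.1f, float diffuseWeight = 0.6f,
        float specularWeight = 0.3f, float shininess = 32.0f)
    {
        Vector3 n = Vector3.Normalize(normal);
        Vector3 toLight = Vector3.Normalize(lightPos - surfacePos);
        Vector3 toEye = Vector3.Normalize(eyePos - surfacePos);

        // Diffuse: how directly the surface faces the light.
        float diffuse = MathF.Max(0.0f, Vector3.Dot(n, toLight));

        // Specular: how closely the mirrored light direction lines up with the viewer.
        // Skipped entirely when the surface faces away from the light.
        float specular = 0.0f;
        if (diffuse > 0.0f)
        {
            Vector3 reflected = Vector3.Reflect(-toLight, n);
            specular = MathF.Pow(MathF.Max(0.0f, Vector3.Dot(reflected, toEye)), shininess);
        }

        // Ambient is a constant floor; the sum is clamped to a displayable range.
        float total = ambient + diffuseWeight * diffuse + specularWeight * specular;
        return Math.Clamp(total, 0.0f, 1.0f);
    }
}
```

With the light and the viewer both straight above an upward-facing surface, all three terms contribute. With the light below the surface, only the ambient floor remains.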

It might be the right time to split the shaders into two files since the pixel shader is going to expand to take care of the lighting we're implementing.

```csharp
public void CreateShaders()
{
    string vertexShaderFile = Path.Combine(AppContext.BaseDirectory, "VertexShader.hlsl");
    string pixelShaderFile = Path.Combine(AppContext.BaseDirectory, "PixelShader.hlsl");

    var vertexEntryPoint = "VS";
    var vertexProfile = "vs_5_0";
    ReadOnlyMemory<byte> vertexShaderByteCode = Compiler.CompileFromFile(vertexShaderFile, vertexEntryPoint, vertexProfile);

    var pixelEntryPoint = "PS";
    var pixelProfile = "ps_5_0";
    ReadOnlyMemory<byte> pixelShaderByteCode = Compiler.CompileFromFile(pixelShaderFile, pixelEntryPoint, pixelProfile);

    ...
}
```

Pretty straightforward. Now, let's take a look at how we'll build up the vertex shader.

New and Improved Vertex Shader

Here's the entry point for the vertex shader called VS.

```hlsl
VertexOutput VS(VertexInput input)
{
    VertexOutput output;

    // Missing calculations

    return output;
}
```

The return type for the vertex shader is VertexOutput. So, we're going to build a VertexOutput inside the vertex shader and pass something to the pixel shader that it can use. Groovy. But what are we getting in here? The VertexInput struct. Let's map out what's inside it at the top of the file.

```hlsl
struct VertexInput
{
    float3 position : POSITION;
    float3 normal : NORMAL;
};
```

So, we're defining what the vertex shader expects to receive: a vertex position and a normal vector, tagged with the POSITION and NORMAL semantics. The VertexInput struct allows the shader to understand and process the input data correctly. The vertices themselves arrive from the vertex buffer. So, where do the matrices we'll transform them with come from? The constant buffer, of course.

```hlsl
cbuffer ConstantBuffer : register(b0)
{
    float4x4 WorldViewProjection;
    float4x4 World;
    float4 LightPosition;
}
```

The constant buffer contains values that are shared among all vertices or pixels being processed by a shader. Here, we're getting whatever we passed in back in the last article. So, we have our matrices defining how everything is projected on the screen and how the model is transformed in the world. Additionally, we get the position of the light in the scene.
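On the C# side, the data uploaded into this buffer has to match the HLSL layout field for field. The struct from the previous article isn't repeated here, so the following is only a sketch of what a matching layout might look like. The `SceneConstants` and `SceneConstantsInfo` names are assumptions, not the project's actual types.

```csharp
using System.Numerics;
using System.Runtime.InteropServices;

// Hypothetical CPU-side mirror of the HLSL cbuffer; names are illustrative.
[StructLayout(LayoutKind.Sequential)]
public struct SceneConstants
{
    public Matrix4x4 WorldViewProjection; // float4x4, 64 bytes
    public Matrix4x4 World;               // float4x4, 64 bytes
    public Vector4 LightPosition;         // float4, 16 bytes
}

public static class SceneConstantsInfo
{
    // Size of the struct as it would be copied into the GPU buffer.
    public static int SizeInBytes => Marshal.SizeOf<SceneConstants>();

    // Direct3D 11 requires a constant buffer's size to be a multiple of 16 bytes.
    public static bool IsValidSize => SizeInBytes % 16 == 0;
}
```

Declaring the light as a four-component vector keeps the total at 144 bytes, which satisfies the 16-byte rule without any manual padding.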

Next, let's define what properties we want the pixel shader to have once we get there.

```hlsl
struct VertexOutput
{
    float4 position : SV_POSITION;
    float3 world : POSITION0;
    float3 normal : NORMAL;
};
```

We'll want the pixel shader to get the pixel's position on the screen, which is SV_POSITION. We'll want the world-space position of the pixel, which is POSITION0. And we'll need the NORMAL of the pixel in world space. Be aware that this struct works like an interface: the pixel shader's input has to declare the same fields, with the same semantics, in the same order.

Ok, so the idea is that the vertex shader does something with the input data and then passes it to the pixel shader. We need to transform a bunch of the vertex positions from one space to another because it allows for proper positioning and orientation of 3D objects within the scene. Here's what we'll do in the VS function.

```hlsl
VertexOutput VS(VertexInput input)
{
    float3x3 rotation = (float3x3) World;
    float4 position = float4(input.position, 1.0f);

    VertexOutput output;
    output.position = mul(WorldViewProjection, position);
    output.world = mul(World, position).xyz;
    output.normal = normalize(mul(rotation, input.normal));

    return output;
}
```

- `float3x3 rotation` turns the `World` matrix into a 3x3 matrix called `rotation`. Think of `World` as a set of instructions that defines how an object is positioned, scaled, and rotated. The cast keeps the upper-left 3x3 part, which holds the rotation (and any scale) but drops the translation. As long as the object is only rotated or uniformly scaled, that's all we need to reorient the normals.
- `float4(input.position, 1.0f)` creates a new 4D vector `position` using the `input.position` values. This vector represents the position of a vertex (a point in 3D space) in our graphics object.
- `mul(WorldViewProjection, position)` transforms the `position` vector by multiplying it with the `WorldViewProjection` matrix. This combination of matrices accounts for the object's position and scaling, the camera's position and orientation, and depth and perspective, so it takes the vertex from its local coordinate space all the way to its position on the screen.
- `mul(World, position).xyz` transforms the `position` vector using the `World` matrix to get the vertex's position in the world coordinate space. The `.xyz` extracts the 3D position (ignoring the fourth component, which is used for homogeneous coordinates).
- `normalize(mul(rotation, input.normal))` transforms the `input.normal` vector (the surface normal at the vertex) by multiplying it with the `rotation` matrix. This applies the same rotation to the `normal` vector that is applied to the vertex position. The `normalize` function ensures that the normal vector has a length of 1, which is important for lighting calculations.

Each vertex in a 3D model is originally defined in its local coordinate system called object space. In object space, the vertices are relative to the object's origin and have no knowledge of their position or orientation in the world. To position and orient objects in the 3D world, we need to transform the vertices from object space to world space. This transformation applies translations, rotations, and scalings specified by the world matrix (World). It allows us to place the object at a specific position, rotate it, or scale it uniformly or non-uniformly.

By transforming all the vertices of an object from object space to world space, we ensure that all the vertices are aligned correctly within the same coordinate system. This is important for maintaining consistency in the scene and allowing objects to interact with each other accurately. Transforming the vertices to world space is essential for performing lighting calculations accurately. Lighting calculations require the positions and normals of the vertices in world space to determine how light interacts with the object's surface.
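The same object-space to world-space step can be tried on the CPU with System.Numerics. This is a sketch rather than the project's code: `WorldTransformSketch` is a made-up helper. Note that System.Numerics treats vectors as rows (vector times matrix), whereas the shader's `mul(World, position)` treats the position as a column.

```csharp
using System.Numerics;

// Hypothetical helper for illustration only.
public static class WorldTransformSketch
{
    // Object space -> world space for a point: rotation, scale, and translation all apply.
    public static Vector3 ToWorldPosition(Vector3 objectPosition, Matrix4x4 world)
        => Vector3.Transform(objectPosition, world);

    // Object space -> world space for a direction: the translation part of the matrix
    // is ignored, then the result is brought back to unit length.
    public static Vector3 ToWorldNormal(Vector3 objectNormal, Matrix4x4 world)
        => Vector3.Normalize(Vector3.TransformNormal(objectNormal, world));
}
```

Moving the object changes every world position but leaves the normals alone, which is why the two are handled separately. Like the 3x3 cast in the shader, this treatment of normals is only safe for rotation and uniform scale. A non-uniformly scaled object needs the inverse transpose of the world matrix instead.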

The Pixel Shader

To prepare for lighting, in addition to the vertex shader, we also need to pass the constant buffer data to the pixel shader.

```csharp
public void SetRenderState()
{
    ...
    deviceContext.PSSetShader(pixelShader, null, 0);
    deviceContext.PSSetConstantBuffer(0, constantBuffer);
}
```

And as with the vertex shader, we want to access the constant buffer in the HLSL file.

```hlsl
cbuffer ConstantBuffer : register(b0)
{
    float4x4 WorldViewProjection;
    float4x4 World;
    float4 LightPosition;
}
```

After that, let's define what's coming in from the vertex shader. And remember, these have to match the output of the vertex shader.

struct PixelInput

{

float4 position : SV_POSITION;

float3 world : POSITION0;

float3 normal : NORMAL;

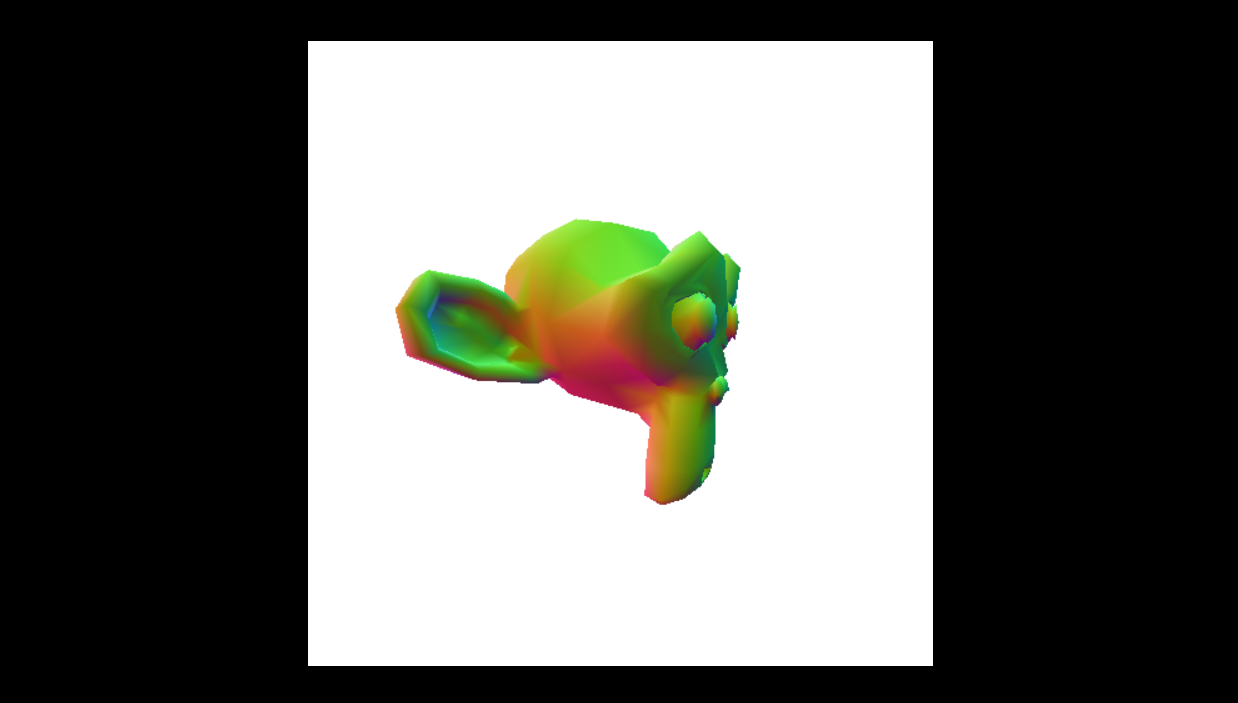

};Then, we can tackle the entry function for the pixel shader called PS. Let's just visualize the normals of the model so we can see that everything's working so far.

```hlsl
float4 PS(PixelInput input) : SV_TARGET
{
    return float4((input.normal * 0.5f) + 0.5f, 1.0f);
}
```

So, the pixel shader function takes the structure PixelInput as input and returns a float4 value, which represents the color of the pixel. The SV_TARGET semantic indicates that the value will be used as the final output color of the pixel. The function computes the final color by performing a calculation on the input's normal property.

- `input.normal` represents the normal vector of the surface at the pixel being shaded. The normal vector defines the direction the surface is facing.
- `(input.normal * 0.5f) + 0.5f` scales and shifts the normal vector, remapping its components from their original range (-1 to 1) to the range (0 to 1), which is suitable for representing colors.
- The resulting vector is then used as the RGB components of the final color. Each component maps to its respective color channel (red, green, blue), and after the remapping each value lies between 0 and 1.

You might have noticed that many of the values we deal with sit in the range from 0 to 1. That's common in 3D programming: color channels, texture coordinates, and lighting factors are usually normalized this way. With everything on the same 0 to 1 scale, values can be multiplied and blended uniformly, and the results map directly onto color channels.

Input Layout

The vertex shader is expecting a certain layout for the data it's getting. Since we specified POSITION and NORMAL in the VertexInput, we need to make sure that the input layout matches that. So let's change it a bit.

```csharp
InputElementDescription[] inputElements = new InputElementDescription[]
{
    new InputElementDescription("POSITION", 0, Format.R32G32B32_Float, 0, 0),
    new InputElementDescription("NORMAL", 0, Format.R32G32B32_Float, 12, 0)
};

inputLayout = device.CreateInputLayout(inputElements, vertexShaderByteCode.Span);
```

Note that the offset keeps incrementing based on the previous element. POSITION has three 32-bit components (R32, G32, and B32), so the offset for NORMAL is 3 times 4 bytes, or 12 (a byte is 8 bits, so 32 bits are 4 bytes). If we had another element after this (such as TEXCOORD), we'd add the size of NORMAL (again, 12 bytes) to the offset, making it 24.
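One way to avoid doing this arithmetic by hand is to let the runtime report the offsets of a vertex struct that mirrors the layout. The article doesn't show the project's vertex type, so `VertexPositionNormal` and `VertexLayoutInfo` below are assumed names used only for this sketch.

```csharp
using System.Numerics;
using System.Runtime.InteropServices;

// Hypothetical vertex struct mirroring the POSITION + NORMAL layout.
[StructLayout(LayoutKind.Sequential)]
public struct VertexPositionNormal
{
    public Vector3 Position; // 3 floats = 12 bytes, starts at offset 0
    public Vector3 Normal;   // 3 floats = 12 bytes, starts at offset 12
}

public static class VertexLayoutInfo
{
    public static int PositionOffset =>
        (int)Marshal.OffsetOf<VertexPositionNormal>(nameof(VertexPositionNormal.Position));

    public static int NormalOffset =>
        (int)Marshal.OffsetOf<VertexPositionNormal>(nameof(VertexPositionNormal.Normal));

    // Total size of one vertex; this is also where a third element would start.
    public static int Stride => Marshal.SizeOf<VertexPositionNormal>();
}
```

The reported values line up with the numbers above: 0 for the position, 12 for the normal, and 24 for the whole vertex.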

If we run the application now, we can see pretty colors rendered on our 3D model.

So, why does this turn the model to green, red, and blue? The pixel shader is using the world-space normal vector of each pixel as the basis for the color. So, the red, green, and blue components of the color represent the X, Y, and Z components of the surface normal vector, respectively. The normal vector describes the direction the surface is facing at each point.

With our shader, the X-component of the normal drives the red channel, the Y-component drives the green channel, and the Z-component drives the blue channel. Because of the remapping, a component of 1 gives full intensity in its channel, 0 gives half intensity, and -1 gives none.
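The remap is easy to check by hand. Here's the same calculation in C# with System.Numerics; `NormalColorSketch` is just an illustrative name, not something from the project.

```csharp
using System.Numerics;

// Hypothetical helper for illustration only.
public static class NormalColorSketch
{
    // Remaps each component from [-1, 1] to [0, 1], as the pixel shader does.
    public static Vector3 ToColor(Vector3 normal) => normal * 0.5f + new Vector3(0.5f);
}
```

A normal pointing straight up, (0, 1, 0), becomes (0.5, 1, 0.5), a pale green. A normal pointing along negative X, (-1, 0, 0), becomes (0, 0.5, 0.5), with no red at all.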

Diffuse Light

Alright, enough of the dog-and-pony show. Let's render some light on the screen.

```hlsl
float4 PS(PixelInput input) : SV_TARGET
{
    // Re-normalize: interpolating across a triangle can shorten the normal.
    float3 normal = normalize(input.normal);
    float3 lightDir = normalize(LightPosition.xyz - input.world.xyz);
    float NdotL = max(0, dot(normal, lightDir));
    float4 color = float4(NdotL, NdotL, NdotL, 1.0f);

    return color;
}
```

- `normalize(LightPosition.xyz - input.world.xyz)` calculates the direction from the surface point towards the light source. It subtracts the position of the surface point from the position of the light source to determine the direction vector. The normalize function ensures that the direction vector has a length of 1.
- `max(0, dot(normal, lightDir))` calculates the amount of light hitting the surface by taking the dot product of the surface normal vector and the light direction vector. The dot product measures the alignment between the two vectors. If the vectors are pointing in the same direction (surface facing the light), the result is positive. If they are pointing in opposite directions (surface facing away from the light), the result is negative. The max(0, ...) ensures that we consider only positive values, as negative values mean the surface is facing away from the light.
- `float4(NdotL, NdotL, NdotL, 1.0f)` creates a color based on the amount of light hitting the surface (NdotL). The float4 represents a color with red, green, blue, and alpha channels. By setting all three RGB channels to NdotL, we create a grayscale color where brighter areas represent more light hitting the surface. The alpha channel is set to 1.0f, indicating full opacity.
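For a feel of the numbers, the diffuse term can be reproduced on the CPU. This is a small sketch with System.Numerics, and `DiffuseSketch` is a hypothetical name that doesn't appear in the project.

```csharp
using System;
using System.Numerics;

// Hypothetical helper for illustration only.
public static class DiffuseSketch
{
    // Cosine of the angle between the normal and the direction to the light,
    // clamped so that surfaces facing away receive no light.
    public static float NdotL(Vector3 normal, Vector3 surfacePos, Vector3 lightPos)
    {
        Vector3 lightDir = Vector3.Normalize(lightPos - surfacePos);
        return MathF.Max(0.0f, Vector3.Dot(Vector3.Normalize(normal), lightDir));
    }
}
```

For an upward-facing surface at the origin, a light straight above gives 1 (white), a light at a 45 degree angle gives roughly 0.71 (light gray), and a light below the surface gives 0 (black).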

And there you have it. Dynamic diffuse lighting for your favorite monkey head. I went ahead and also made a few controls so that you can adjust the light while the application is running.

XAML UI

Let's implement some X, Y, and Z sliders in the XAML file.

```xml
<Grid Width="500">
    <Grid.RowDefinitions>
        <RowDefinition Height="Auto" />
        <RowDefinition Height="Auto" />
        <RowDefinition Height="Auto" />
        <RowDefinition Height="Auto" />
    </Grid.RowDefinitions>

    <SwapChainPanel Grid.Row="0" x:Name="SwapChainCanvas" Width="500" Height="500" Loaded="SwapChainCanvas_Loaded"></SwapChainPanel>

    <TextBlock Grid.Row="1" Text="X:" />
    <TextBlock Grid.Row="2" Text="Y:" />
    <TextBlock Grid.Row="3" Text="Z:" />

    <Slider Grid.Row="1" Minimum="-10" Maximum="10" Width="450" ValueChanged="SliderX_ValueChanged" />
    <Slider Grid.Row="2" Minimum="-10" Maximum="10" Width="450" ValueChanged="SliderY_ValueChanged" />
    <Slider Grid.Row="3" Minimum="-10" Maximum="10" Width="450" ValueChanged="SliderZ_ValueChanged" />
</Grid>
```

You'll notice that I replaced the StackPanel with a more versatile Grid. Each slider is routed to its own event handler when its value changes. Here's the code-behind.

```csharp
float lightX = 0.0f; // -10 right, 10 left
float lightY = 0.0f; // -10 down, 10 up
float lightZ = 0.0f; // -10 near, 10 far
Vector3 lightPosition;

private void SliderX_ValueChanged(object sender, RangeBaseValueChangedEventArgs e)
{
    lightX = (float)e.NewValue;
}

private void SliderY_ValueChanged(object sender, RangeBaseValueChangedEventArgs e)
{
    lightY = (float)e.NewValue;
}

private void SliderZ_ValueChanged(object sender, RangeBaseValueChangedEventArgs e)
{
    lightZ = (float)e.NewValue;
}
```

Pretty straightforward. In each event handler, we assign the new value to a field on the class.

```csharp
lightPosition = new Vector3(lightX, lightY, lightZ);
```

Then, in the Update method, we rebuild the lightPosition vector from the new values. And that's that. Now you can control the light in the WinUI 3 window.
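The shader declares LightPosition as a float4, while the code-behind keeps a Vector3, so the value needs a fourth component somewhere on its way into the constant buffer. How the project does this isn't shown here. The following is one possible way, with `LightPacking` as an assumed helper name.

```csharp
using System.Numerics;

// Hypothetical helper for illustration only.
public static class LightPacking
{
    // Pads the three slider values to four components to match the HLSL float4.
    // w = 1 marks the value as a position rather than a direction.
    public static Vector4 ToShaderLight(float x, float y, float z)
        => new Vector4(new Vector3(x, y, z), 1.0f);
}
```

The pixel shader only reads `LightPosition.xyz`, so the fourth component doesn't affect the result. It's there to keep the buffer layout aligned.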

That's it for the 3D part of the DirectX tutorial, at least for the time being. Hope this gives you a good jumping-off point if you want to pursue the endeavor further.

Visual Studio project:

d3dwinui3pt10.zip (50 KB)

D3DWinUI3 part 10 in GitHub